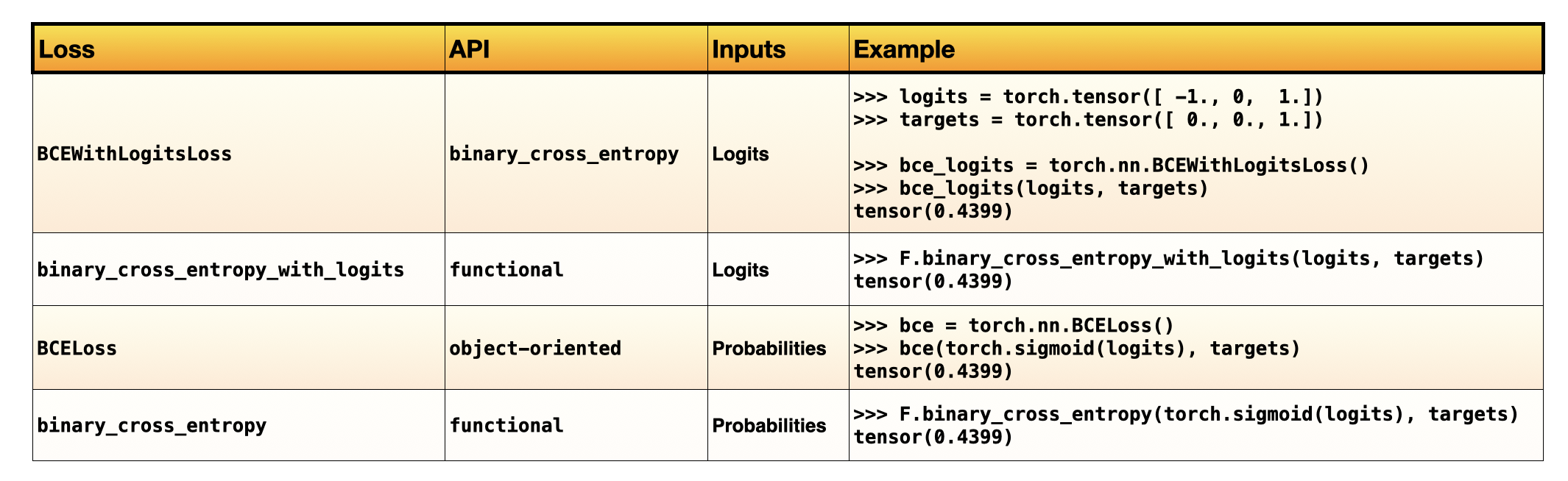

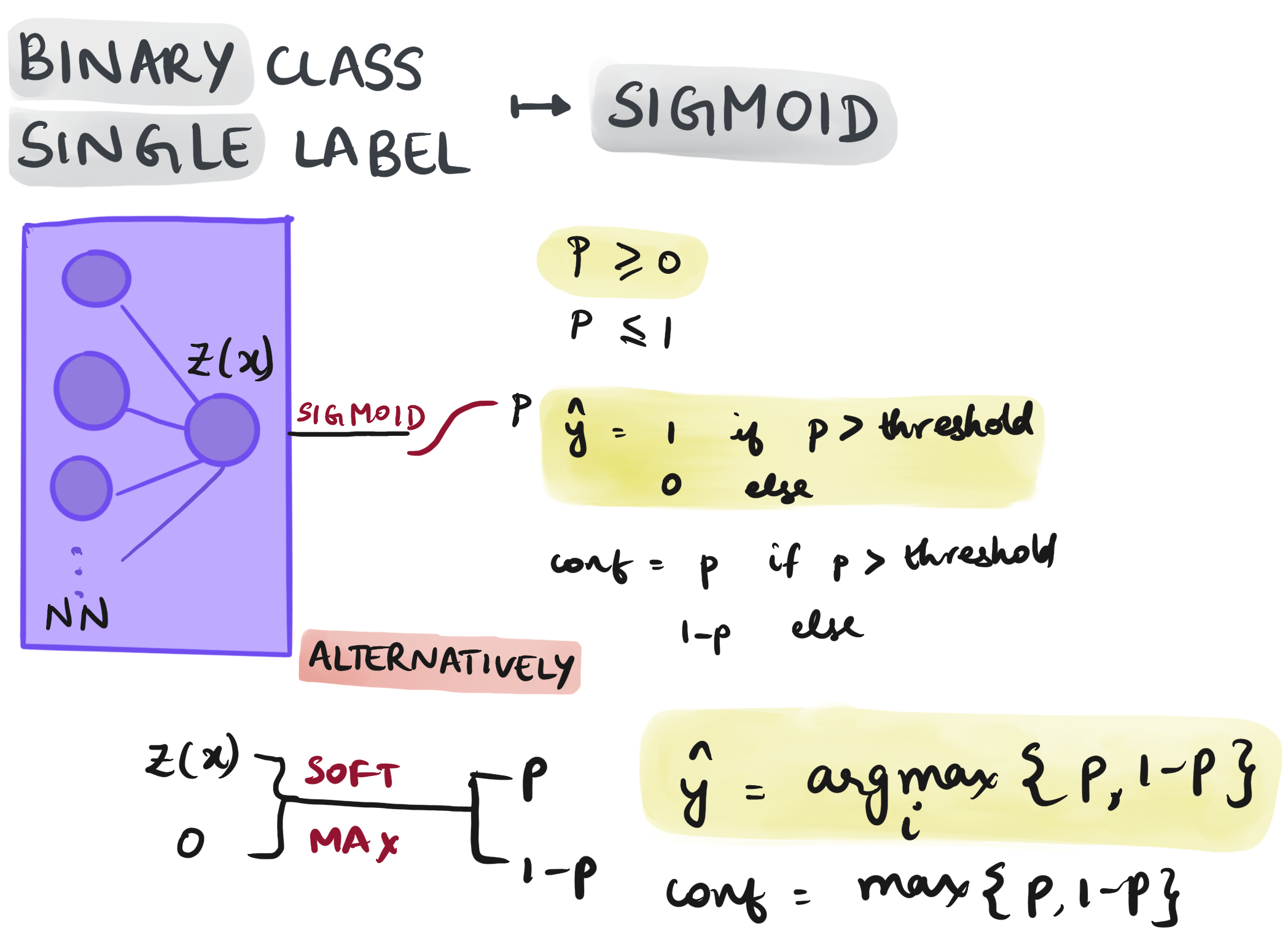

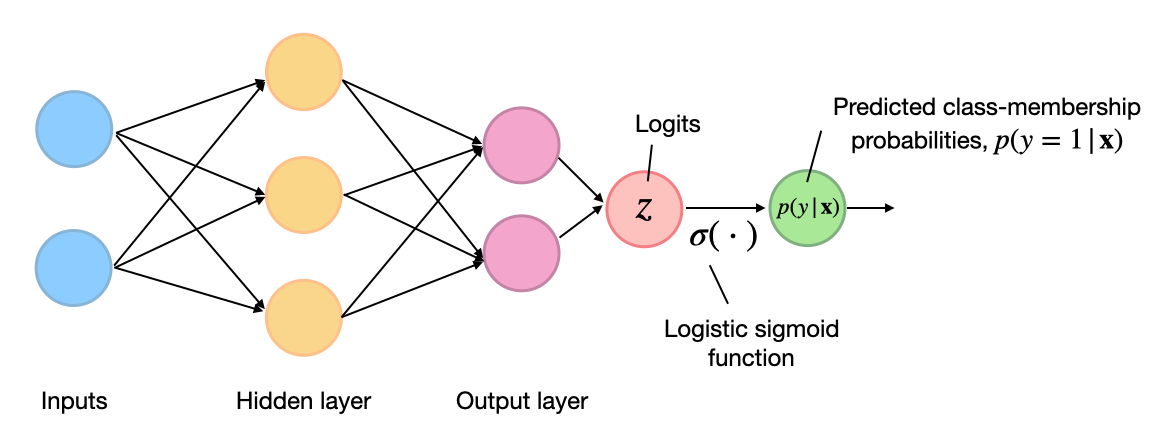

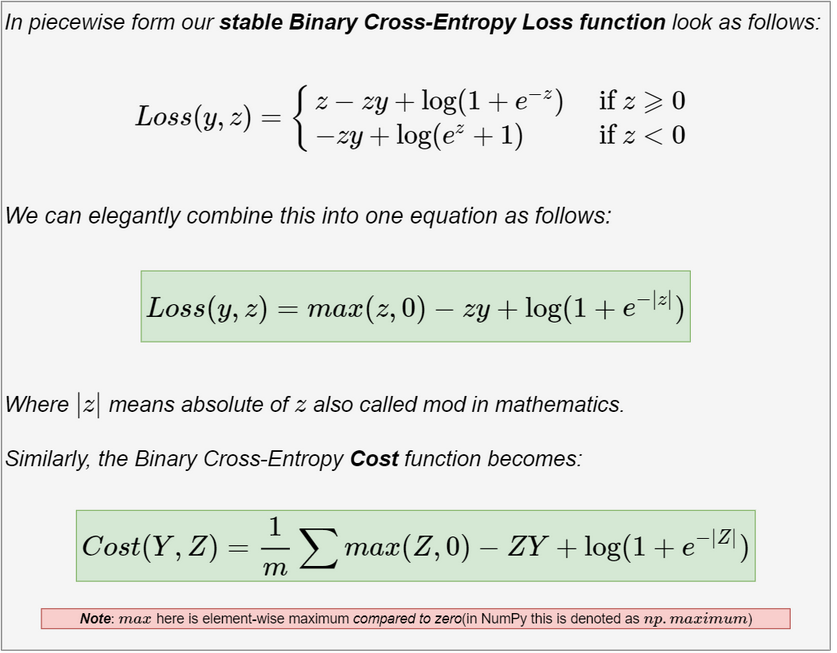

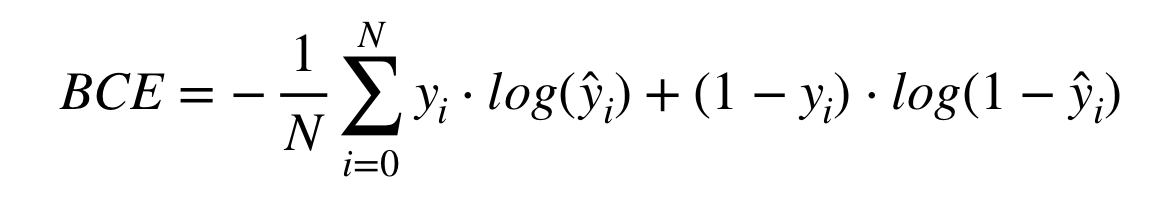

How do Tensorflow and Keras implement Binary Classification and the Binary Cross-Entropy function? | by Rafay Khan | Medium

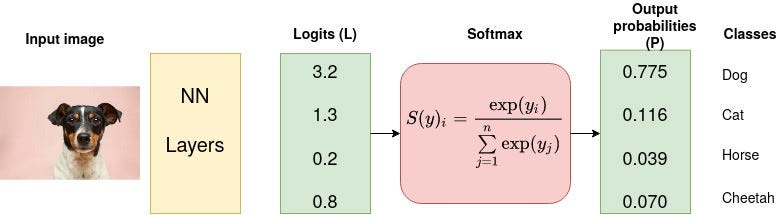

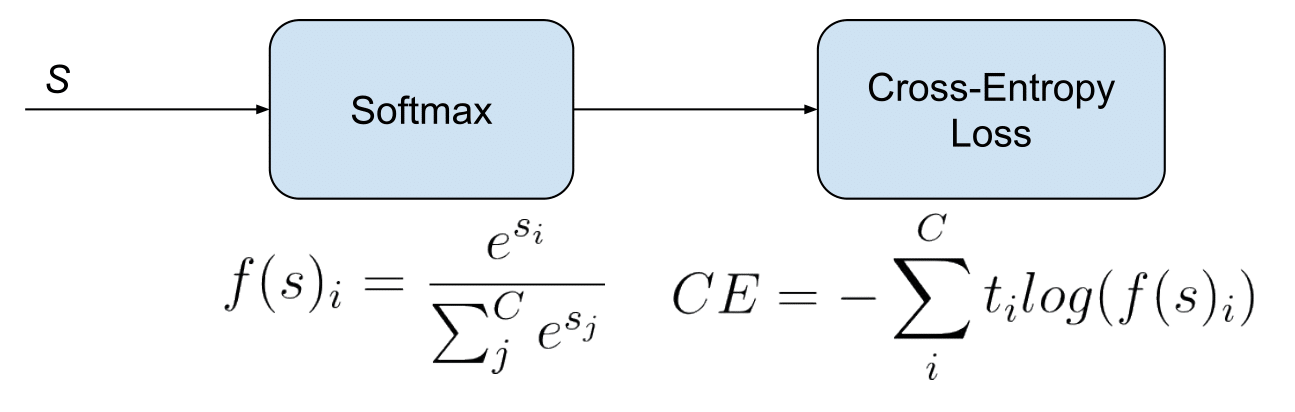

Understanding Categorical Cross-Entropy Loss, Binary Cross-Entropy Loss, Softmax Loss, Logistic Loss, Focal Loss and all those confusing names

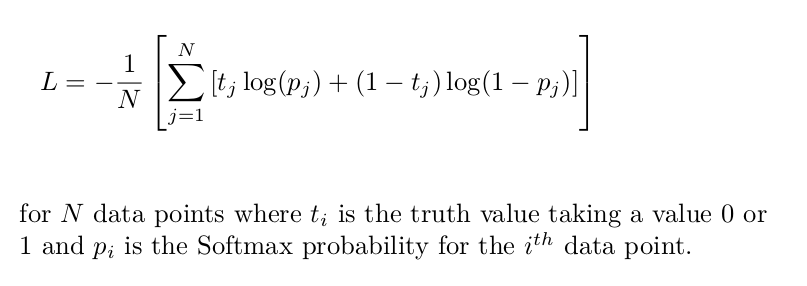

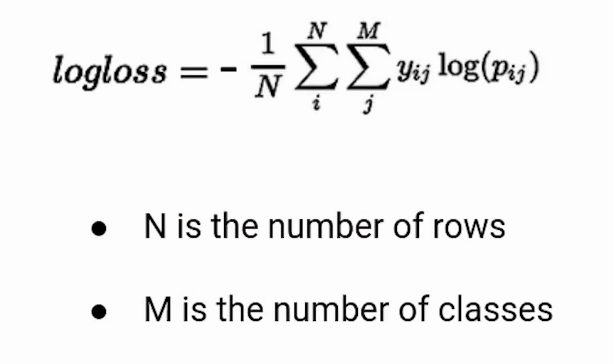

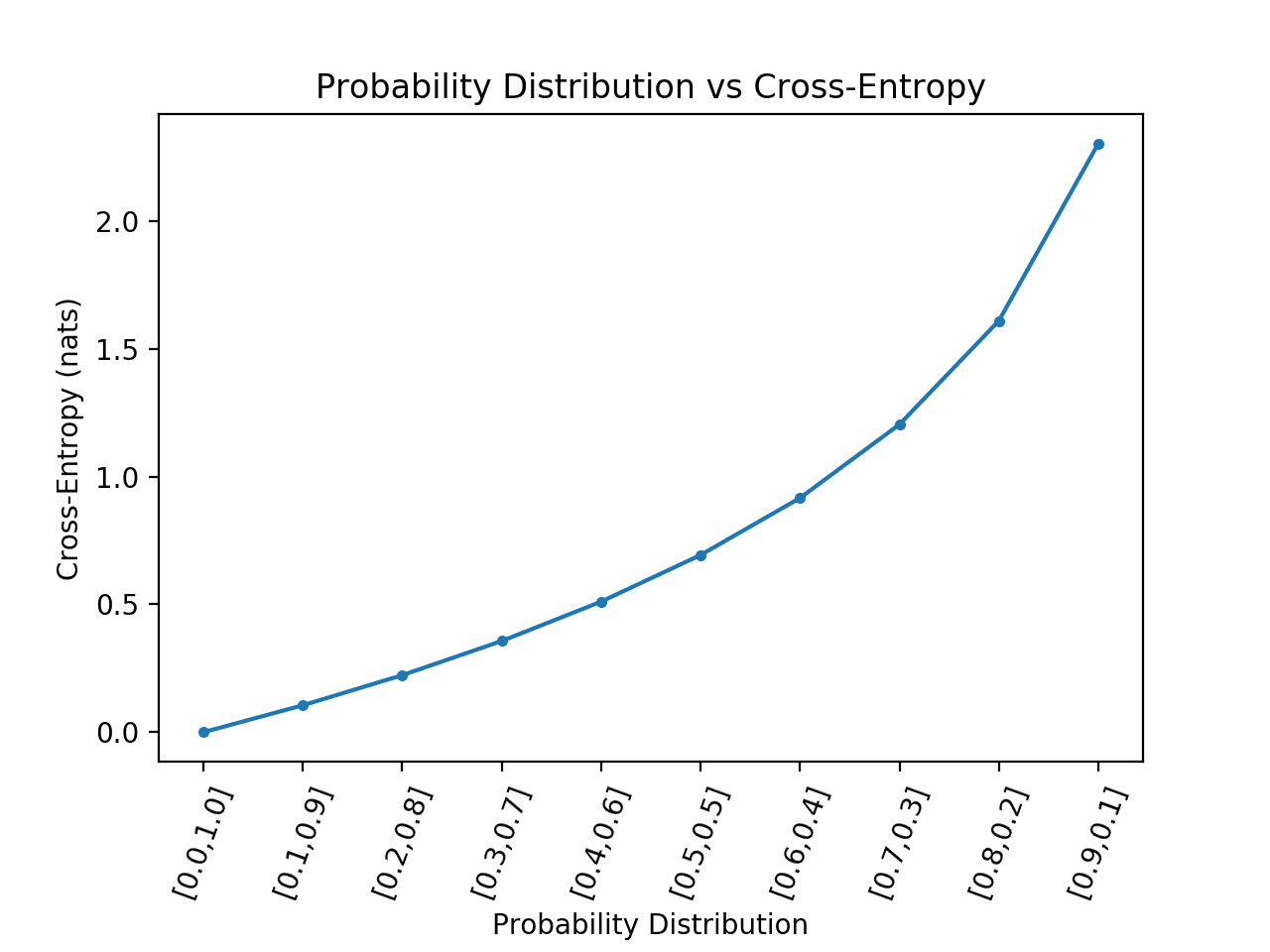

Cross-Entropy Loss Function. A loss function used in most… | by Kiprono Elijah Koech | Towards Data Science

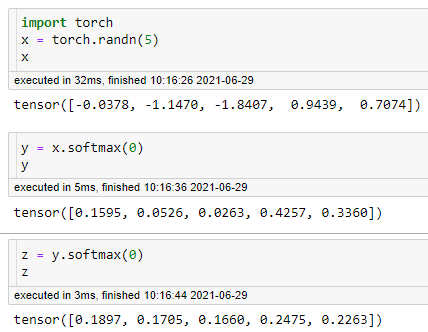

machine learning - Cross Entropy in PyTorch is different from what I learnt (Not about logit input, but about the loss for every node) - Cross Validated

Understanding PyTorch Loss Functions: The Maths and Algorithms (Part 2) | by Juan Nathaniel | Towards Data Science